My Projects

Effects of Rhetorical Strategies and Skin Tones on Agent Persuasiveness in Assisted Decision-Making

Amama Mahmood and Chien-Ming Huang. 2022. Effects of Rhetorical Strategies and Skin Tones on Agent Persuasiveness in Assisted Decision-Making. In Proceedings of the ACM International Conference on Intelligent Virtual Agents (2022).

Owning Mistakes Sincerely: Strategies for Mitigating AI Errors

Amama Mahmood, Jeanie W Fung, Isabel Won, and Chien-Ming Huang. 2022. Owning Mistakes Sincerely: Strategies for Mitigating AI Errors. In CHI Conference on Human Factors in Computing Systems (CHI '22). Association for Computing Machinery, New York, NY, USA, Article 578, 1–11. https://doi.org/10.1145/3491102.3517565

Interactive AI systems such as voice assistants are bound to make errors because of imperfect sensing and reasoning. Prior human-AI interaction research has illustrated the importance of various strategies for error mitigation in repairing the perception of an AI following a breakdown in service. These strategies include explanations, monetary rewards, and apologies. This paper extends prior work on error mitigation by exploring how different methods of apology conveyance may affect people’s perceptions of AI agents; we report an online study (N=37) that examines how varying the sincerity of an apology and the assignment of blame (on either the agent itself or others) affects participants’ perceptions and experience with erroneous AI agents. We found that agents that openly accepted the blame and apologized sincerely for mistakes were thought to be more intelligent, likeable, and effective in recovering from errors than agents that shifted the blame to others.

Crowdsourcing Thumbnail Captions via Time-Constrained Methods

Carlos A Aguirre, Amama Mahmood, and Chien-Ming Huang. 2022. Crowdsourcing Thumbnail Captions via Time-Constrained Methods. In 27th International Conference on Intelligent User Interfaces (IUI '22). Association for Computing Machinery, New York, NY, USA, 36–48. https://doi.org/10.1145/3490099.3511136

Speech interfaces, such as personal assistants and screen readers, employ captions to allow users to consume images; however, there is typically only one caption available per image, which may not be adequate for all settings (e.g., browsing large quantities of images). Longer captions require more time to consume, whereas shorter captions may hinder a user’s ability to fully understand the image’s content. We explore how to effectively collect both thumbnail captions—succinct image descriptions meant to be consumed quickly—and comprehensive captions, which allow individuals to understand visual content in greater detail. We consider text-based and time-constrained methods to collect descriptions at these two levels of detail, and find that a time-constrained method is most effective for collecting thumbnail captions while preserving caption accuracy. We evaluate our collected captions along three human-rated axes—correctness, fluency, and level of detail—and discuss the potential for model-based metrics to perform automatic evaluation.

How Mock Model Training Enhances User Perceptions of AI Systems

Amama Mahmood, Gopika Ajaykumar, and Chien-Ming Huang. 2021. How Mock Model Training Enhances User Perceptions of AI Systems. In Human Centered AI (HCAI) workshop at NeurIPS (2021), arXiv preprint arXiv:2111.08830.

Artificial Intelligence (AI) is an integral part of our daily technology use and will likely be a critical component of emerging technologies. However, negative user preconceptions may hinder adoption of AI-based decision making. Prior work has highlighted the potential of factors such as transparency and explainability in improving user perceptions of AI. We further contribute to work on improving user perceptions of AI by demonstrating that bringing the user in the loop through mock model training can improve their perceptions of an AI agent's capability and their comfort with the possibility of using technology employing the AI agent.

NASA Telerobotic Satellite Servicing Project

Amama Mahmood, Balazs P. Vagvolgyi, Will Pryor, Louis L. Whitcomb, Peter Kazanzides, and Simon Leonard. 2020. Visual Monitoring and Servoing of a Cutting Blade during Telerobotic Satellite Servicing. In IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), 2020, pp. 1903–1908. https://doi.org/10.1109/IROS45743.2020.9341485

We propose a system for visually monitoring and servoing the cutting of a multi-layer insulation (MLI) blanket that covers the envelope of satellites and spacecraft. The main contributions of this paper are: 1) a model relating visual features that describe the engagement depth of the blade to the force exerted on the MLI blanket by the cutting tool, 2) a blade design and algorithm to reliably detect the engagement depth of the blade inside the MLI, and 3) a servoing mechanism that achieves the desired applied force by monitoring the engagement depth. We present results that validate these contributions by comparing forces estimated from visual feedback to measured forces at the blade. We also demonstrate the robustness of the blade design and vision processing under challenging conditions.
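To make the servoing idea concrete, here is a minimal sketch of a force-tracking loop driven by an engagement-depth estimate. It is an illustration only, not the method from the paper: the linear depth-to-force model, the stiffness and gain values, and the names `estimate_force`, `servo_step`, and `simulate` are all assumptions introduced for this example, and the vision-based depth measurement is replaced by a simulated value.

```python
def estimate_force(depth_mm, stiffness_n_per_mm=0.8):
    """Hypothetical linear model mapping engagement depth (mm) to force (N).

    The linear form and the stiffness value are assumptions for illustration.
    """
    return stiffness_n_per_mm * max(depth_mm, 0.0)


def servo_step(depth_mm, target_force_n, gain_mm_per_n=0.5):
    """Return a depth correction (mm) proportional to the force error."""
    error_n = target_force_n - estimate_force(depth_mm)
    return gain_mm_per_n * error_n


def simulate(target_force_n=2.0, steps=30):
    """Run the proportional loop; depth stands in for the visual measurement."""
    depth_mm = 0.0
    for _ in range(steps):
        depth_mm += servo_step(depth_mm, target_force_n)
    return depth_mm, estimate_force(depth_mm)


final_depth_mm, final_force_n = simulate()
print(f"depth = {final_depth_mm:.3f} mm, estimated force = {final_force_n:.3f} N")
```

With these assumed values the loop settles where the estimated force matches the target. A real system would replace the simulated depth with the depth detected in the camera image and would need a model fitted to measured forces.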

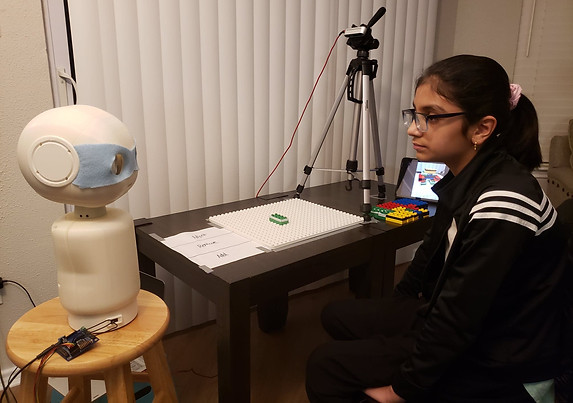

Child-Robot-Tutoring

M.S.E. Thesis. Intuitive Computing Lab, Johns Hopkins University

Advisor: Dr. Chien-Ming Huang

Designing a good feedback strategy in child-robot tutoring is essential for self-regulated learning. In human-human interactions, implicit feedback techniques are used to encourage students while they solve a task. To the best of my knowledge, however, current robot tutoring systems mostly use explicit feedback strategies. I am interested in studying the impact of implicit and explicit feedback on one-on-one tutoring sessions in which a robot acts as the tutor. The proposed system consists of the following components:

Perception system: To track actions of the participant.

Intelligent Robot Tutor: Acts as a tutor to provide feedback based on perception.

Duplo blocks: Serve as the playground for the participant to solve the designed spatial visualization task.

Effect of Robot Tutor Coaching Levels on Trust and Comfortability of Kids

Course Project for Human-Robot-Interaction

The unique social presence of robots can be leveraged in learning situations to increase children's comfort while still providing instructional guidance. Furthermore, a robot tutor's motivating nature can create a positive and supportive environment, whereas its responsive nature can foster trust. In this pilot study, we examined how the robot's coaching level, i.e., the degree to which it is motivating and responsive, affects comfort, satisfaction, and trust in a one-on-one tutoring setting. Our results show that a motivating and responsive robot creates an amicable environment that encourages kids to seek help through questions. The robot's presence and responsiveness give the child a sense of support. Our pilot indicates that the robot tutor in the responsive and motivating condition is preferred over the other coaching levels. Click here for the detailed report. Here is a short video showing robot actions and responses.

Brain Computer Interfacing

Neuroinformatic Lab, National University of Sciences and Technology and Signal, Image and Video Processing Lab, Lahore University of Management Sciences

Advisors: Dr. Awais M. Kamboh and Dr. Nadeem A. Khan

Brain Computer Interfaces (BCIs) serve as an integration tool between acquired brain signals and external devices. Classifying the acquired brain signals precisely, with minimal misclassification error, is an arduous task. Existing techniques for classification of multi-class motor imagery electroencephalogram (EEG) signals have low accuracy and are computationally inefficient. I developed a classification algorithm that uses two frequency ranges, the mu and beta rhythms, for feature extraction with common spatial patterns (CSP), along with a support vector machine (SVM) for classification. The technique uses only four frequency bands with no feature reduction and consequently has a lower computational cost. Applied to BCI Competition III dataset IIIa, the algorithm achieved the highest classification accuracy in comparison to existing algorithms, with a mean accuracy of 85.5% for offline classification. Click here to access the published paper. I presented this paper at the “International Conference of the Engineering in Medicine and Biology Society” held in Korea in July 2017.
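For readers unfamiliar with CSP, the sketch below shows the general idea of CSP feature extraction for two classes using NumPy. It is a simplified illustration, not the published algorithm. It assumes the trials are already band-pass filtered, handles only two classes rather than the multi-class case, and runs on synthetic data. The function names (`class_covariance`, `fit_csp`, `csp_features`, `make_trials`) are hypothetical.

```python
import numpy as np


def class_covariance(trials):
    """Mean trace-normalized spatial covariance.

    trials has shape (n_trials, n_channels, n_samples).
    """
    covs = []
    for x in trials:
        c = x @ x.T
        covs.append(c / np.trace(c))
    return np.mean(covs, axis=0)


def fit_csp(trials_a, trials_b, n_pairs=1):
    """Return CSP spatial filters (one per row) for two classes of trials."""
    cov_a = class_covariance(trials_a)
    cov_b = class_covariance(trials_b)
    # Whiten the composite covariance of both classes.
    evals, evecs = np.linalg.eigh(cov_a + cov_b)
    whitening = np.diag(evals ** -0.5) @ evecs.T
    # In the whitened space, directions with high variance for class A
    # have low variance for class B, and vice versa.
    s_a = whitening @ cov_a @ whitening.T
    _, b = np.linalg.eigh(s_a)  # eigenvalues in ascending order
    filters = b.T @ whitening
    # Keep filters from both ends of the eigenvalue spectrum.
    idx = np.r_[np.arange(n_pairs), np.arange(-n_pairs, 0)]
    return filters[idx]


def csp_features(filters, trials):
    """Normalized log-variance of each spatially filtered trial."""
    projected = np.einsum("fc,tcs->tfs", filters, trials)
    var = projected.var(axis=2)
    return np.log(var / var.sum(axis=1, keepdims=True))


def make_trials(rng, n_trials, strong_channel, n_channels=4, n_samples=200):
    """Synthetic stand-in for EEG: one channel has higher variance per class."""
    scale = np.ones(n_channels)
    scale[strong_channel] = 3.0
    noise = rng.standard_normal((n_trials, n_channels, n_samples))
    return noise * scale[:, None]


rng = np.random.default_rng(0)
trials_a = make_trials(rng, 30, strong_channel=0)
trials_b = make_trials(rng, 30, strong_channel=1)
filters = fit_csp(trials_a, trials_b)
feat_a = csp_features(filters, trials_a)
feat_b = csp_features(filters, trials_b)
print(feat_a.mean(axis=0), feat_b.mean(axis=0))
```

The resulting feature vectors could then be passed to an SVM, for example scikit-learn's `SVC`. A multi-class setup would typically repeat this for several class pairings and frequency bands.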

My team and I were able to demonstrate a working mobile robot that uses EEG signals as its control signal. Click here to access the detailed report of our final year project, and here is a short video showing real-time control using EEG signals.

Afterwards, I focused on a feasibility analysis of existing multiclass motor imagery systems for real-time applications such as mind-controlled wheelchairs and prosthetics. The analysis compared the feature extraction methods and classification algorithms used in existing solutions in terms of accuracy and computational complexity.